Wednesday, October 21, 2020

Tensors

-- https://www.youtube.com/watch?v=VL4NC4f6m0w

- Tensors are multi-dimensional arrays.

- Tensors are higher-order generalizations of matrices.

- Tensors can encode multi-dimensional data: images have 3 dimensions (height, width, color channels) and videos have 4 (adding time).

- Tensors can encode higher-order relationships and multiple modalities.
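As a rough illustration in NumPy, the order of a tensor is simply its number of axes. The array sizes below are arbitrary, and the frames-first layout for video is just one common convention, assumed here for the sketch:

```python
import numpy as np

# Hypothetical sizes chosen only for illustration.
height, width, channels, frames = 32, 48, 3, 10

vector = np.zeros(5)                                  # order-1 tensor
matrix = np.zeros((5, 4))                             # order-2 tensor
image = np.zeros((height, width, channels))           # order-3 tensor: H x W x C
video = np.zeros((frames, height, width, channels))   # order-4 tensor: T x H x W x C

for name, t in [("vector", vector), ("matrix", matrix), ("image", image), ("video", video)]:
    # ndim is the order (number of modes) of the tensor
    print(f"{name}: order {t.ndim}, shape {t.shape}")
```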

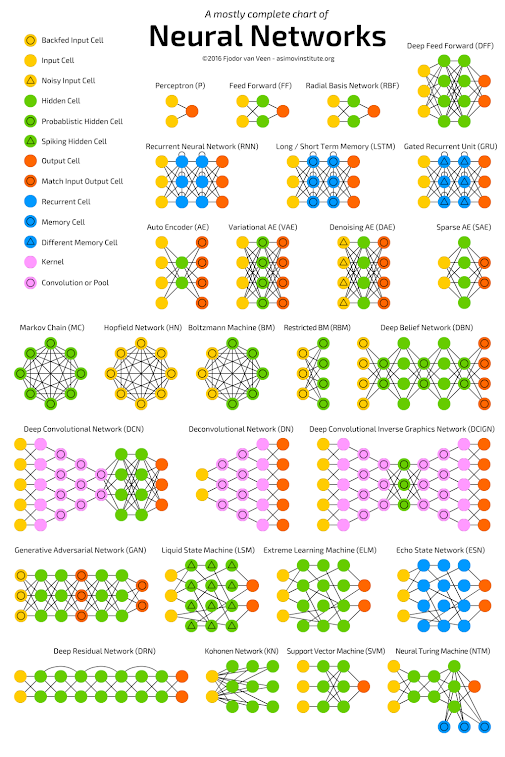

- Tensor algebra is richer than matrix algebra, which enables richer neural network architectures.

- This can yield more compact networks and better accuracy.
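One way tensor methods can make networks more compact is by storing a weight tensor in low-rank factored form. The sketch below uses a CP (CANDECOMP/PARAFAC) factorization as an example of that idea; it is not necessarily the architecture from the talk, and the tensor shape and rank are hypothetical. A full tensor of shape (I, J, K) has I·J·K entries, while rank-R CP factors need only R·(I + J + K):

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 3rd-order weight tensor of shape (I, J, K) and CP rank R.
I, J, K, R = 64, 64, 32, 8

# CP factors: one matrix per mode, each with R columns.
A = rng.standard_normal((I, R))
B = rng.standard_normal((J, R))
C = rng.standard_normal((K, R))

# Rebuild the full tensor: W[i, j, k] = sum_r A[i, r] * B[j, r] * C[k, r]
W = np.einsum("ir,jr,kr->ijk", A, B, C)

full_params = W.size                   # I * J * K
cp_params = A.size + B.size + C.size   # R * (I + J + K)

print(f"full tensor: {full_params} parameters")
print(f"rank-{R} CP factors: {cp_params} parameters")
print(f"compression ratio: {full_params / cp_params:.1f}x")
```

Whether such a compressed layer keeps its accuracy depends on how well the true weights are approximated at the chosen rank.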

---------------------------------------------------

- TensorLy: a framework for tensor algebra.

- Tensor contractions are a core primitive of multilinear algebra.
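A tensor contraction sums over one or more shared indices; matrix multiplication is the special case of two order-2 tensors sharing one index. The sketch below uses plain NumPy rather than TensorLy, so as not to assume details of TensorLy's API. It contracts mode 1 of an order-3 tensor with a matrix (a "mode-n product"), then contracts all three modes against vectors to get a scalar. Shapes are arbitrary:

```python
import numpy as np

rng = np.random.default_rng(0)

T = rng.standard_normal((3, 4, 5))  # order-3 tensor
M = rng.standard_normal((6, 4))     # matrix acting on mode 1 (size 4)

# Mode-1 product: contract the second axis of T with the columns of M.
# Result[i, a, k] = sum_j T[i, j, k] * M[a, j]  -> shape (3, 6, 5)
mode1 = np.einsum("ijk,aj->iak", T, M)

# Same contraction via tensordot; its output is (i, k, a), so move axis a back to position 1.
via_tensordot = np.moveaxis(np.tensordot(T, M, axes=([1], [1])), -1, 1)

# Contracting every index of T against vectors yields a scalar T(u, v, w).
u, v, w = rng.standard_normal(3), rng.standard_normal(4), rng.standard_normal(5)
scalar = np.einsum("ijk,i,j,k->", T, u, v, w)

print(mode1.shape)                        # (3, 6, 5)
print(np.allclose(mode1, via_tensordot))  # True
```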

---------------------------------------------------

Why Tensors

- Statistical reasons:

Incorporate higher-order relationships in data.

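Discover hidden topics, which is not possible with matrix methods alone (a matrix factorization is only unique up to an invertible transform, whereas a tensor decomposition is typically unique).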

- Computational reasons:

Tensor algebra is parallelizable, like linear algebra.

Faster than other algorithms for LDA (latent Dirichlet allocation).

Flexible: training and inference are decoupled.

Guaranteed in theory to converge to the global optimum.
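To see why matrix methods alone cannot pin down hidden factors, note that a low-rank matrix M = A Bᵀ can be explained equally well by A Q and B Q⁻ᵀ for any invertible Q. The factor matrices and Q below are made up for illustration:

```python
import numpy as np

rng = np.random.default_rng(1)

# A rank-2 matrix built from two hypothetical "topic" factors.
A = rng.standard_normal((5, 2))
B = rng.standard_normal((4, 2))
M = A @ B.T

# Any invertible 2x2 Q gives different factors that reproduce M exactly.
Q = np.array([[1.0, 2.0], [0.5, 3.0]])
A2 = A @ Q
B2 = B @ np.linalg.inv(Q).T

print(np.allclose(M, A2 @ B2.T))  # True: same data ...
print(np.allclose(A, A2))         # False: ... different factors
```

A CP decomposition of a third-order tensor does not have this freedom under mild conditions (it is unique up to scaling and permutation of components), which is what lets higher-order moments identify the hidden components.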
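A well-known method from the tensor method-of-moments literature is the tensor power method. It recovers the components of a symmetric, orthogonally decomposable tensor T = Σᵢ wᵢ vᵢ⊗vᵢ⊗vᵢ by repeating x ← T(I, x, x) / ‖T(I, x, x)‖ and then deflating. The talk does not confirm that this is the exact algorithm behind its LDA and convergence claims. The sketch also assumes a synthetic, already-orthogonal tensor, so it skips estimating moments from documents and the whitening step a real LDA pipeline would need. Each step is just a contraction, which is why it parallelizes like ordinary linear algebra:

```python
import numpy as np

rng = np.random.default_rng(0)
d, k = 5, 3

# Hypothetical ground truth: k orthonormal "topic" vectors with positive weights.
V, _ = np.linalg.qr(rng.standard_normal((d, k)))  # columns are orthonormal
weights = np.array([3.0, 2.0, 1.0])

# Symmetric 3rd-order tensor T = sum_i w_i * v_i (x) v_i (x) v_i
T = np.einsum("r,ir,jr,kr->ijk", weights, V, V, V)

def power_iteration(T, rng, n_iter=100):
    """Tensor power method: x <- T(I, x, x) / ||T(I, x, x)||."""
    x = rng.standard_normal(T.shape[0])
    x /= np.linalg.norm(x)
    for _ in range(n_iter):
        y = np.einsum("ijk,j,k->i", T, x, x)  # contract two modes with x
        x = y / np.linalg.norm(y)
    lam = np.einsum("ijk,i,j,k->", T, x, x, x)  # eigenvalue T(x, x, x)
    return lam, x

recovered = []
residual = T.copy()
for _ in range(k):
    lam, x = power_iteration(residual, rng)
    recovered.append((lam, x))
    # Deflation: subtract the component just found, then search again.
    residual = residual - lam * np.einsum("i,j,k->ijk", x, x, x)

for lam, x in recovered:
    # Which true component this estimate lines up with
    best = int(np.argmax(np.abs(V.T @ x)))
    print(f"weight {lam:.3f} ~ true {weights[best]}, |cos| = {abs(V[:, best] @ x):.4f}")
```

The components may come out in any order, since each run converges to whichever vᵢ dominates its random start.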

---------------------------------------------------
