Tuesday, December 29, 2020

Friday, December 25, 2020

Deep Learning Hyperparameter Tuning example

https://www.kaggle.com/jamesleslie/titanic-neural-network-for-beginners

titanic-neural-network-for-beginners:

Summary:

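create_model() is the key piece of the whole notebook: it builds and compiles the Keras network from its hyperparameter arguments, so the grid search can rebuild the model for every combination it tries.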

def create_model(lyrs=[8], act='linear', opt='Adam', dr=0.0):
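The notebook's full create_model() body is not reproduced in these notes, so here is a minimal framework-free sketch of how its four arguments could map onto a layer stack. The helper name describe_model is hypothetical, and the single Dropout layer after the hidden layers plus one sigmoid output unit are assumptions typical of a binary classifier such as Titanic survival, not code copied from the notebook.

```python
def describe_model(lyrs=[8], act="linear", opt="Adam", dr=0.0):
    """Hypothetical, framework-free stand-in for create_model().

    Returns the layer stack as (layer_type, config) tuples instead of a
    compiled Keras model. The default list is never mutated, so it is safe.
    """
    layers = []
    # One Dense hidden layer per entry in lyrs, all sharing the activation act.
    for units in lyrs:
        layers.append(("Dense", {"units": units, "activation": act}))
    # Assumed placement: a single Dropout layer after the hidden stack.
    layers.append(("Dropout", {"rate": dr}))
    # Assumed output: one sigmoid unit for the binary survival target.
    layers.append(("Dense", {"units": 1, "activation": "sigmoid"}))
    return {"optimizer": opt, "layers": layers}


# The deepest architecture from the layer grid, combined with the best dropout rate.
spec = describe_model(lyrs=[12, 8, 4], dr=0.2)
for layer_type, config in spec["layers"]:
    print(layer_type, config)
```

Each entry in lyrs adds one hidden layer, which is why the layer grid can compare [8] against [12, 8, 4] through a single argument.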

Used GridSearchCV to find the best hyperparameter values.

Hyperparameters tuned: batch_size, epochs, optimizer, layers, and dropout rate (drops)

Hyperparameter Tuning

GridSearchCV - batch size and epochs

batch_size = [16, 32, 64]

epochs = [50, 100]

Best: 0.822671 using {'batch_size': 32, 'epochs': 50}

===================================================

GridSearchCV - Optimization Algorithm

optimizer = ['SGD', 'RMSprop', 'Adagrad', 'Adadelta', 'Adam', 'Nadam']

Best: 0.822671 using {'opt': 'Adam'}

===================================================

GridSearchCV - Hidden neurons

layers = [[8],[10],[10,5],[12,6],[12,8,4]]

Best: 0.822671 using {'lyrs': [8]}

===================================================

GridSearchCV - Dropout

drops = [0.0, 0.01, 0.05, 0.1, 0.2, 0.5]

Best: 0.824916 using {'dr': 0.2}

===================================================

model = create_model(lyrs=[8], dr=0.2)

training = model.fit(X_train, y_train, epochs=50, batch_size=32,
                     validation_split=0.2, verbose=0)

Open questions:

a. The initial training run reported val_acc: 86.53%, whereas the final trained model reported acc: 83.16%. Why the gap?

b. Is it sound to fix batch size and epochs at constant values first and then find the best value of each remaining hyperparameter, rather than adding one hyperparameter and then another to the search?
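Question b is partly about cost. A minimal standard-library sketch that counts the candidate settings in the stage-wise search above versus one joint grid over all five hyperparameters; the grids are copied from the notes, and the helper name n_combinations is hypothetical.

```python
from itertools import product
from math import prod

# Search spaces copied from the grids above (keys match the GridSearchCV output).
grids = {
    "batch_size": [16, 32, 64],
    "epochs": [50, 100],
    "opt": ["SGD", "RMSprop", "Adagrad", "Adadelta", "Adam", "Nadam"],
    "lyrs": [[8], [10], [10, 5], [12, 6], [12, 8, 4]],
    "dr": [0.0, 0.01, 0.05, 0.1, 0.2, 0.5],
}

# Stage-wise search: one grid per stage, earlier winners held fixed.
stages = [["batch_size", "epochs"], ["opt"], ["lyrs"], ["dr"]]


def n_combinations(keys):
    """Number of candidate settings in a grid over the given keys."""
    return prod(len(grids[k]) for k in keys)


staged = sum(n_combinations(stage) for stage in stages)
joint = n_combinations(list(grids))

# Enumerate the first stage explicitly, the way a grid search walks it.
stage1 = [dict(zip(stages[0], values)) for values in product(*(grids[k] for k in stages[0]))]

print(staged, joint, len(stage1))
```

Every candidate is trained once per cross-validation fold, so the real number of fits is these counts multiplied by cv. The saving comes from assuming the hyperparameters barely interact; a joint grid (or a randomized search) can find combinations that a staged search misses.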

Monday, December 14, 2020

Monday, December 7, 2020

Evolution of XGBoost Algorithm from Decision Trees

Credit to

https://towardsdatascience.com/https-medium-com-vishalmorde-xgboost-algorithm-long-she-may-rein-edd9f99be63d

XGBoost is a decision-tree-based ensemble Machine Learning algorithm that uses a gradient boosting framework. In prediction problems involving unstructured data (images, text, etc.) artificial neural networks tend to outperform all other algorithms or frameworks. However, when it comes to small-to-medium structured/tabular data, decision tree based algorithms are considered best-in-class right now.

The algorithm differentiates itself in the following ways:

- A wide range of applications: Can be used to solve regression, classification, ranking, and user-defined prediction problems.

- Portability: Runs smoothly on Windows, Linux, and OS X.

- Languages: Supports all major programming languages including C++, Python, R, Java, Scala, and Julia.

- Cloud Integration: Supports AWS, Azure, and YARN clusters and works well with Flink, Spark, and other ecosystems.

Imagine that you are a hiring manager interviewing several candidates with excellent qualifications. Each step of the evolution of tree-based algorithms can be viewed as a version of the interview process.

- Decision Tree: Every hiring manager has a set of criteria such as education level, number of years of experience, interview performance. A decision tree is analogous to a hiring manager interviewing candidates based on his or her own criteria.

- Bagging: Now imagine that instead of a single interviewer, there is an interview panel where each interviewer has a vote. Bagging, or bootstrap aggregating, involves combining inputs from all interviewers for the final decision through a democratic voting process.

- Random Forest: It is a bagging-based algorithm with a key difference wherein only a subset of features is selected at random. In other words, every interviewer will only test the interviewee on certain randomly selected qualifications (e.g. a technical interview for testing programming skills and a behavioral interview for evaluating non-technical skills).

- Boosting: This is an alternative approach where each interviewer alters the evaluation criteria based on feedback from the previous interviewer. This ‘boosts’ the efficiency of the interview process by deploying a more dynamic evaluation process.

- Gradient Boosting: A special case of boosting in which errors are minimized by a gradient descent algorithm, much as strategy consulting firms use case interviews to weed out less qualified candidates.

- XGBoost: Think of XGBoost as gradient boosting on ‘steroids’ (well it is called ‘Extreme Gradient Boosting’ for a reason!). It is a perfect combination of software and hardware optimization techniques to yield superior results using less computing resources in the shortest amount of time.
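To make the boosting and gradient boosting steps concrete, here is a minimal pure-Python sketch of gradient boosting for regression with squared loss, using one-split trees (stumps) as base learners. It illustrates plain gradient boosting, not XGBoost itself; the toy data and the n_rounds and learning_rate values are arbitrary assumptions.

```python
def fit_stump(xs, residuals):
    """Fit a depth-1 regression tree (a "stump") to residuals on 1-D inputs.

    Tries every threshold between distinct x values and keeps the split with
    the lowest squared error. Needs at least two distinct x values.
    """
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared loss with stumps as base learners."""
    base = sum(ys) / len(ys)  # start from the constant prediction (the mean)
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # For the loss (y - F(x))**2 / 2 the negative gradient is y - F(x),
        # so each new stump is fit to the current residuals.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
model = gradient_boost(xs, ys)
baseline_mse = sum((y - sum(ys) / len(ys)) ** 2 for y in ys) / len(ys)
model_mse = sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)
print(f"mean-only MSE: {baseline_mse:.4f}, boosted MSE: {model_mse:.4f}")
```

Each round corrects what the previous rounds got wrong, which is the "each interviewer alters the criteria based on the previous one" idea; the small learning_rate keeps any single stump from dominating.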

System Optimization:

- Parallelization: XGBoost approaches the process of sequential tree building using parallelized implementation. This is possible due to the interchangeable nature of loops used for building base learners; the outer loop that enumerates the leaf nodes of a tree, and the second inner loop that calculates the features. This nesting of loops limits parallelization because without completing the inner loop (more computationally demanding of the two), the outer loop cannot be started. Therefore, to improve run time, the order of loops is interchanged using initialization through a global scan of all instances and sorting using parallel threads. This switch improves algorithmic performance by offsetting any parallelization overheads in computation.

- Tree Pruning: Within the GBM framework, the stopping criterion for tree splitting is greedy: it depends on the negative loss criterion at the point of split. XGBoost instead grows each tree up to the specified ‘max_depth’ first and then prunes it backward, removing splits that do not yield a positive gain. This ‘depth-first’ approach improves computational performance significantly.

- Hardware Optimization: This algorithm has been designed to make efficient use of hardware resources. This is accomplished through cache awareness: internal buffers are allocated in each thread to store gradient statistics. Further enhancements such as ‘out-of-core’ computing use available disk space to handle big data frames that do not fit into memory.

Algorithmic Enhancements:

- Regularization: It penalizes more complex models through both LASSO (L1) and Ridge (L2) regularization to prevent overfitting.

- Sparsity Awareness: XGBoost naturally accepts sparse input features: at each split it ‘learns’ a default direction for missing values based on the training loss, so it handles different sparsity patterns in the data efficiently.

- Weighted Quantile Sketch: XGBoost employs the distributed weighted quantile sketch algorithm to find candidate split points efficiently on weighted datasets.

- Cross-validation: The algorithm comes with built-in cross-validation method at each iteration, taking away the need to explicitly program this search and to specify the exact number of boosting iterations required in a single run.
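The regularization and pruning points above can be made concrete with the split-finding formulas from the XGBoost paper (Chen and Guestrin, 2016). With a second-order approximation of the loss and a complexity penalty of gamma per leaf plus (lambda / 2) times the sum of squared leaf weights, each leaf's optimal value and each split's gain have closed forms. This is a minimal sketch of those two formulas only; it omits the L1 term (alpha) for brevity, and the function names and toy data are hypothetical.

```python
def leaf_weight(G, H, lam):
    """Optimal leaf value w* = -G / (H + lambda), where G and H are the sums
    of first- and second-order gradients of the loss over the leaf's rows."""
    return -G / (H + lam)


def split_gain(G_L, H_L, G_R, H_R, lam, gamma):
    """Loss reduction from splitting a node into left and right children.

    The -gamma term is the per-leaf complexity penalty: a split whose gain is
    not positive does not pay for its extra leaf and would be pruned.
    """
    def score(G, H):
        return G * G / (H + lam)

    return 0.5 * (score(G_L, H_L) + score(G_R, H_R) - score(G_L + G_R, H_L + H_R)) - gamma


# Squared loss (y - p)**2 / 2 gives gradient g = p - y and hessian h = 1.
ys = [1.0, 1.0, 3.0, 3.0]
preds = [2.0, 2.0, 2.0, 2.0]
g = [p - y for p, y in zip(preds, ys)]   # [1.0, 1.0, -1.0, -1.0]
G_L, H_L = sum(g[:2]), 2.0               # left child: first two rows
G_R, H_R = sum(g[2:]), 2.0               # right child: last two rows

for lam in (0.0, 1.0):
    print(f"lambda={lam}: left w*={leaf_weight(G_L, H_L, lam):+.3f}, "
          f"right w*={leaf_weight(G_R, H_R, lam):+.3f}, "
          f"gain={split_gain(G_L, H_L, G_R, H_R, lam, gamma=0.0):.3f}")
```

With lambda = 0 the leaves fully correct the residuals (-1 and +1); with lambda = 1 they shrink to about -0.667 and +0.667, and raising gamma to 2 makes the gain negative, so the split would be pruned.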

Machine Learning Validation Techniques

Credit to

https://towardsdatascience.com/validating-your-machine-learning-model-25b4c8643fb7

The article covers the following validation methods:

- Train/test split

- k-Fold Cross-Validation

- Leave-one-out Cross-Validation

- Leave-one-group-out Cross-Validation

- Nested Cross-Validation

- Time-series Cross-Validation

- Wilcoxon signed-rank test

- McNemar’s test

- 5x2CV paired t-test

- 5x2CV combined F test
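Several of the methods above differ mainly in how row indices are split. A minimal sketch of k-fold (and, with k equal to the number of rows, leave-one-out) plus expanding-window time-series splits, assuming the rows are already in the desired order with no shuffling. The helper names are hypothetical; scikit-learn's KFold, LeaveOneOut and TimeSeriesSplit are the usual implementations.

```python
def kfold_indices(n_samples, k):
    """Yield (train, test) index lists for k contiguous, unshuffled folds.

    With k == n_samples this becomes leave-one-out cross-validation.
    """
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def time_series_indices(n_samples, n_splits):
    """Yield expanding-window (train, test) splits for time-ordered data.

    Each test block comes strictly after its training data, so the model is
    never evaluated on a period that precedes what it was trained on.
    """
    test_size = n_samples // (n_splits + 1)
    for i in range(n_splits):
        test_start = n_samples - (n_splits - i) * test_size
        yield list(range(0, test_start)), list(range(test_start, test_start + test_size))


for train, test in kfold_indices(10, 3):
    print("k-fold     ", train, test)
for train, test in time_series_indices(10, 4):
    print("time series", train, test)
```

The key difference: in k-fold every row is tested exactly once and may be trained on rows from its "future", whereas the time-series splits only ever train on the past.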

Monday, November 30, 2020

Feature Engineering - Numerical Data Scaling

Numerical data can be scaled so that each feature has a proportionate influence on the prediction, instead of features with large ranges dominating.

Common techniques for scaling

So how do we do it, exactly? How can we align different features into the same scale?

Keep in mind that not all ML algorithms are sensitive to the scale of input features (tree-based models, for example, are not). Here is a collection of commonly used scaling and normalizing transformations for data science and ML projects:

- Mean/variance standardization

- MinMax scaling

- Maxabs scaling

- Robust scaling

- Normalizer
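Each of the listed transformations is a short formula. A minimal pure-Python sketch of all five (the function names are illustrative; scikit-learn's StandardScaler, MinMaxScaler, MaxAbsScaler, RobustScaler and Normalizer are the usual implementations). The first four work per feature (column); the Normalizer works per sample (row).

```python
from math import sqrt
from statistics import mean, median, pstdev, quantiles


def standardize(xs):
    """Mean/variance standardization: zero mean, unit (population) std dev."""
    mu, sigma = mean(xs), pstdev(xs)
    return [(x - mu) / sigma for x in xs]


def min_max(xs):
    """MinMax scaling to the range [0, 1]."""
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) for x in xs]


def max_abs(xs):
    """MaxAbs scaling to [-1, 1]; zeros stay zero, so sparsity is preserved."""
    m = max(abs(x) for x in xs)
    return [x / m for x in xs]


def robust(xs):
    """Robust scaling: subtract the median, divide by the interquartile range.
    Median and IQR are barely moved by a few outliers, unlike mean and std."""
    q1, _, q3 = quantiles(xs, n=4, method="inclusive")
    med = median(xs)
    return [(x - med) / (q3 - q1) for x in xs]


def normalize_rows(rows):
    """Normalizer: rescale each sample (row), not each feature, to unit L2 norm."""
    out = []
    for row in rows:
        norm = sqrt(sum(v * v for v in row))
        out.append([v / norm for v in row])
    return out


print(min_max([2, 4, 6]))           # [0.0, 0.5, 1.0]
print(robust([1, 2, 3, 4, 5]))      # [-1.0, -0.5, 0.0, 0.5, 1.0]
print(normalize_rows([[3, 4]]))     # [[0.6, 0.8]]
```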

One-hot encoding applies to categorical data. In this example, you have one column called home Type with three levels: House, Apartment, and Condo. The data frame has five observations for that feature.

With one-hot encoding, you convert this one column of home Type into three columns: a column for House, a column for Apartment, and a column for Condo. You encode each observation with either a 1 or 0: 1 to indicate the home type of that particular observation, or 0 for the other options.
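A minimal pure-Python sketch of the encoding just described. The original table is not shown, so the five home Type values below are hypothetical, and the helper name one_hot is illustrative (pandas.get_dummies does the same for a DataFrame column).

```python
def one_hot(values, categories=None):
    """Turn one categorical column into one 0/1 column per category.

    Categories default to their order of first appearance in values.
    """
    if categories is None:
        categories = list(dict.fromkeys(values))
    rows = [[1 if value == category else 0 for category in categories] for value in values]
    return categories, rows


# Hypothetical five observations of home Type.
home_type = ["House", "Apartment", "Condo", "House", "Condo"]
columns, encoded = one_hot(home_type)
print(columns)
for value, row in zip(home_type, encoded):
    print(f"{value:<10} {row}")
```

Every encoded row contains exactly one 1, in the column of that observation's home type.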

============================================================

Topics related to this subdomain

Here are some topics you may want to study for more in-depth information related to this subdomain:

Scaling

Normalizing

Dimensionality reduction

Date formatting

One-hot encoding

Tuesday, November 24, 2020

Monday, November 16, 2020

UNIVARIATE Analysis

VISUALIZING UNIVARIATE CONTINUOUS DATA

Univariate plots are of two types:

1) Enumerative plots

2) Summary plots

Univariate enumerative plots:

These plots enumerate/show every observation in data and provide information about the distribution of the observations on a single data variable. We now look at different enumerative plots.

Examples:

1. UNIVARIATE SCATTER PLOT

2. LINE PLOT (with markers)

3. STRIP PLOT

4. SWARM PLOT

Univariate summary plots:

These plots give a more concise description of the location, dispersion, and distribution of a variable than an enumerative plot. It is not feasible to retrieve every individual data value in a summary plot, but it helps in efficiently representing the whole data from which better conclusions can be made on the entire data set.

5. HISTOGRAMS

6. DENSITY PLOTS

7. RUG PLOTS

8. BOX PLOTS

9. DISTPLOT: seaborn's distplot() (deprecated since seaborn 0.11 in favour of displot() and histplot())

10. VIOLIN PLOTS

VISUALIZING CATEGORICAL VARIABLES:

11. BAR CHART

12. PIE CHART

https://www.analyticsvidhya.com/blog/2020/07/univariate-analysis-visualization-with-illustrations-in-python/

Monday, November 9, 2020

Hyperparameters for common ML algorithms

In machine learning, a hyperparameter (sometimes called a tuning or training parameter) is any parameter whose value is set before the learning process begins, whereas other parameter values are computed during training.
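A minimal sketch of the distinction, assuming a one-weight linear model fit by gradient descent (the function name and toy data are hypothetical): the learning rate and number of epochs are chosen up front, while the slope w is what training computes.

```python
def train_slope(xs, ys, learning_rate=0.01, epochs=200):
    """Fit y ≈ w * x by gradient descent on mean squared error.

    learning_rate and epochs are hyperparameters: fixed before training starts.
    w is a model parameter: its value is computed by the training loop.
    """
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Derivative of mean((w*x - y)**2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


xs, ys = [1, 2, 3, 4], [2, 4, 6, 8]                        # generated by y = 2x
print(train_slope(xs, ys))                                 # close to 2.0
print(train_slope(xs, ys, learning_rate=0.2, epochs=20))   # diverges
```

The same data and model give a good or a useless parameter depending only on the hyperparameters, which is why they are tuned (for example with GridSearchCV) rather than learned.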

K-Nearest Neighbors: K (n_neighbors), leaf_size, weights, and metric

Decision Trees and Random Forests: n_estimators (Random Forests only), max_depth, min_samples_split, min_samples_leaf, and criterion

AdaBoost and Gradient Boosting: n_estimators, learning_rate, and base_estimator (AdaBoost) / loss (Gradient Boosting)

Support Vector Machines: C, kernel, and gamma

The cheat sheet focuses specifically on the hyperparameters that tend to have the greatest effect on the bias-variance tradeoff.

However, it is very important to keep in mind both the bias-variance tradeoff and the tradeoff between computational cost and scoring metrics. Ideally, we want a model with low bias and low variance to limit overall error.

https://medium.com/swlh/the-hyperparameter-cheat-sheet-770f1fed32ff

The Hyperparameter Cheat Sheet: A quick guide to hyperparameter tuning utilizing Scikit Learn’s GridSearchCV, and the bias/variance trade-off

J.P. Rinfret

---------------------------------------------------------------------------------------------------------------------

=====================================================================

https://towardsdatascience.com/model-parameters-and-hyperparameters-in-machine-learning-what-is-the-difference-702d30970f6

Examples of hyperparameters used in the scikit-learn package

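1. Perceptron Classifier (max_iter replaces the n_iter argument, which was removed in newer scikit-learn versions)

from sklearn.linear_model import Perceptron
Perceptron(max_iter=40, eta0=0.1, random_state=0)

2. Train/Test Split (a function, not an estimator)

from sklearn.model_selection import train_test_split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=0)

3. Logistic Regression Classifier

from sklearn.linear_model import LogisticRegression
LogisticRegression(C=1000.0, random_state=0)

4. KNN (k-Nearest Neighbors) Classifier

from sklearn.neighbors import KNeighborsClassifier
KNeighborsClassifier(n_neighbors=5, p=2, metric='minkowski')

5. Support Vector Machine Classifier

from sklearn.svm import SVC
SVC(kernel='linear', C=1.0, random_state=0)

6. Decision Tree Classifier

from sklearn.tree import DecisionTreeClassifier
DecisionTreeClassifier(criterion='entropy', max_depth=3, random_state=0)

7. Lasso Regression

from sklearn.linear_model import Lasso
Lasso(alpha=0.1)

8. Principal Component Analysis

from sklearn.decomposition import PCA
PCA(n_components=4)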

Wednesday, October 21, 2020

Tensors

-- https://www.youtube.com/watch?v=VL4NC4f6m0w

- Tensors are multi-dimensional arrays.

- Tensors are higher-order extensions of matrices.

- Tensors encode multi-dimensional data: images have 3 dimensions (height, width, channels) and videos have 4 (frames, height, width, channels).

- Tensors encode higher-order relationships and modalities.

- Tensor algebra is richer than matrix algebra, which allows richer neural network architectures.

- More compact networks and better accuracy.

---------------------------------------------------

- TensorLy: a Python framework for tensor algebra

- Tensor contractions are a core primitive of multilinear algebra.
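A tensor contraction sums over an index shared by its operands. As a minimal pure-Python illustration (not TensorLy's API; the helper names are hypothetical), contracting the channel mode of an image tensor (height x width x channels) with a weight vector yields a matrix. The ITU-R BT.601 luma weights are used only as a familiar example.

```python
def shape(tensor):
    """Shape of a nested-list tensor, e.g. (height, width, channels)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


def contract_last_mode(tensor, vector):
    """Contract a 3-way tensor T[i][j][k] with a vector v[k]:
    M[i][j] = sum over k of T[i][j][k] * v[k], giving a matrix."""
    return [[sum(t * v for t, v in zip(fiber, vector)) for fiber in row] for row in tensor]


# A tiny 2 x 2 "image" with 3 colour channels (RGB), values in [0, 1].
image = [
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
    [[0.0, 0.0, 1.0], [1.0, 1.0, 1.0]],
]
# Luma weights: contracting the channel mode gives a 2 x 2 grayscale image.
luma = [0.299, 0.587, 0.114]
gray = contract_last_mode(image, luma)
print(shape(image), "->", shape(gray))
print(gray)
```

With NumPy the same contraction is numpy.einsum('ijk,k->ij', image, luma); the order of the result drops by one for every index summed away.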

---------------------------------------------------

Why Tensors

- Statistical reasons

Incorporate higher order relationships in data.

Discover hidden topics (not possible with matrix methods)

- Computational reasons:

Tensor algebra is parallelizable, like linear algebra.

Faster than other algorithms for LDA (latent Dirichlet allocation, a topic model).

Flexible: Training and inference decoupled

Guaranteed in theory to converge to global optimum.

---------------------------------------------------

Sunday, September 20, 2020

Feature Store

https://medium.com/data-for-ai/what-is-a-feature-store-for-ml-29b62580af5d

https://hopsworks.readthedocs.io/en/latest/featurestore/guides/featurestore.html

https://hopsworks.readthedocs.io/en/latest/featurestore/guides/featurestore.html#technical-details-on-the-architecture

Saturday, September 19, 2020

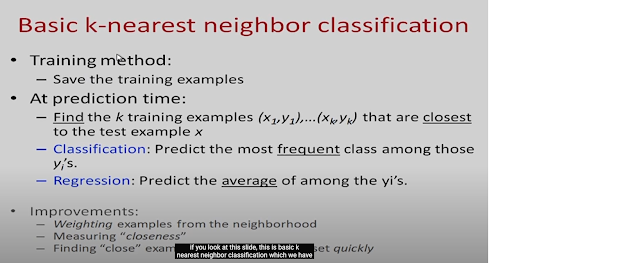

K nearest neighbor classification

KNN

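Distance metrics: three are commonly used (Euclidean, Manhattan, and Minkowski).

Lazy / instance-based learning: the training data is simply stored, and all computation happens at prediction time.

K value: choose an odd K when the number of classes in y is even (e.g., binary classification) so that the majority vote cannot tie.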
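A minimal pure-Python sketch of KNN classification that ties these notes together: Minkowski distance covers Manhattan (p=1) and Euclidean (p=2), nothing happens at "training" time, and an odd k=3 with two classes cannot produce a tied vote. The function names and toy data are hypothetical.

```python
from collections import Counter


def minkowski(a, b, p=2):
    """Minkowski distance: p=1 is Manhattan distance, p=2 is Euclidean distance."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)


def knn_predict(train_X, train_y, query, k=3, p=2):
    """Classify query by majority vote among its k nearest training points.

    Lazy learning: there is no fitting step; all work happens at query time.
    """
    neighbours = sorted(zip(train_X, train_y), key=lambda pair: minkowski(pair[0], query, p))
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]


train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(train_X, train_y, (0.5, 0.5)))         # a
print(knn_predict(train_X, train_y, (5.5, 5.2), p=1))    # b
```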

Accuracy Score vs F1 Score.

What should and should not be done when facing an imbalanced-class problem?

Now, the following are the fundamental metrics for the above data:

1 — Precision: It is the proportion of correctly identified positive cases among all predicted positive cases. Thus, it is useful when the cost of False Positives is high.

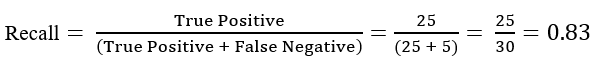

2 — Recall: It is the measure of the correctly identified positive cases from all the actual positive cases. It is important when the cost of False Negatives is high.

3 — Accuracy: One of the more obvious metrics, it is the measure of all the correctly identified cases. It is most used when all the classes are equally important.

Now for our above example, suppose that there are only 30 patients who actually have cancer. What if our model identifies 25 of those as having cancer?

The accuracy in this case is 90%, which is high enough for the model to be considered ‘accurate’. However, there are 5 patients who actually have cancer and the model predicted that they don’t. That is too high a cost, so our model should try to minimize these False Negatives.

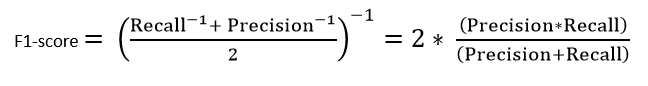

For these cases, we use the F1-score.

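4 — F1-score: This is the harmonic mean of Precision and Recall, F1 = 2 × Precision × Recall / (Precision + Recall), and it gives a better measure of the incorrectly classified cases than the Accuracy metric.

We use the harmonic mean because it penalizes extreme values: if either Precision or Recall is low, the F1-score is low too.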

To summarise the differences between the F1-score and the accuracy,

- Accuracy is used when the True Positives and True Negatives are more important, while F1-score is used when the False Negatives and False Positives are crucial.

- Accuracy can be used when the class distribution is similar, while F1-score is a better metric when there are imbalanced classes, as in the above case.

- In most real-life classification problems the class distribution is imbalanced, so F1-score is often the better metric to evaluate a model on.
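A minimal sketch computing all four metrics from confusion-matrix counts. The full confusion matrix of the cancer example is only in the image, so both sets of counts below are hypothetical: the first is chosen to match the 30 actual cases, 25 caught and 90% accuracy; the second shows a rare-positive case where accuracy looks excellent while F1 exposes the missed cancers.

```python
def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, accuracy and F1 from binary confusion-matrix counts."""
    def ratio(num, den):
        return num / den if den else 0.0  # define 0/0 as 0.0 to avoid crashing

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    accuracy = ratio(tp + tn, tp + fp + fn + tn)
    # F1 is the harmonic mean of precision and recall.
    f1 = ratio(2 * precision * recall, precision + recall)
    return {"precision": precision, "recall": recall, "accuracy": accuracy, "f1": f1}


# Hypothetical: 100 patients, 30 with cancer, 25 of them caught.
example = classification_metrics(tp=25, fp=5, fn=5, tn=65)
# Hypothetical, heavily imbalanced: 1,000 patients, 30 with cancer, only 5 caught.
rare = classification_metrics(tp=5, fp=0, fn=25, tn=970)
for name, m in [("example", example), ("rare", rare)]:
    print(name, {key: round(value, 3) for key, value in m.items()})
```

In the second case accuracy rises to 97.5% even though 25 of 30 cancers are missed, while F1 drops to about 0.286.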

5 Ways to Find Outliers in Your Data

Outliers are data points that are far from other data points. In other words, they’re unusual values in a dataset. Outliers are problematic for many statistical analyses because they can cause tests to either miss significant findings or distort real results.

A single outlier can distort reality. For example, in a dataset of people's heights, a single extreme value changes the mean height by 0.6 m (2 feet) and the standard deviation by a whopping 2.16 m (7 feet)!

There are a variety of ways to find outliers. All these methods employ different approaches for finding values that are unusual compared to the rest of the dataset. I’ll start with visual assessments and then move onto more analytical assessments.

Graphing Your Data to Identify Outliers

Boxplots, histograms, and scatterplots can highlight outliers.
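Boxplots flag points beyond their whiskers, which by the usual convention (Tukey's rule) extend 1.5 interquartile ranges past the quartiles. A minimal sketch of that rule, with hypothetical height data containing one data-entry error:

```python
from statistics import quantiles


def iqr_outliers(data, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR], the usual boxplot whisker rule."""
    q1, _, q3 = quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < low or x > high]


# Hypothetical heights in metres, with one data-entry error.
heights = [1.60, 1.65, 1.70, 1.72, 1.68, 1.75, 1.62, 10.0]
print(iqr_outliers(heights))  # [10.0]
```

Because quartiles are barely affected by the extreme value itself, this rule still works on small samples.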

Z-scores can quantify the unusualness of an observation when your data follow the normal distribution. Z-scores are the number of standard deviations above and below the mean that each value falls. For example, a Z-score of 2 indicates that an observation is two standard deviations above the average while a Z-score of -2 signifies it is two standard deviations below the mean. A Z-score of zero represents a value that equals the mean.
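A minimal sketch of the Z-score calculation, using the population standard deviation and a cut-off of 3, a common convention rather than a rule from the source; the helper names and the readings data are hypothetical.

```python
from statistics import mean, pstdev


def z_scores(data):
    """Number of (population) standard deviations each value lies from the mean."""
    mu, sigma = mean(data), pstdev(data)
    return [(x - mu) / sigma for x in data]


def z_outliers(data, threshold=3.0):
    """Flag values whose absolute Z-score exceeds threshold."""
    return [x for x, z in zip(data, z_scores(data)) if abs(z) > threshold]


print(z_scores([2, 4, 4, 4, 5, 5, 7, 9]))  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]

readings = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10] * 2 + [30]  # hypothetical data
print(z_outliers(readings))  # [30]
```

One caveat ties back to the height example: an extreme value inflates the standard deviation it is measured against. With the population standard deviation, no value in a sample of size n can have |z| above (n - 1) / sqrt(n), so for n = 8 the cap is about 2.47 and a threshold of 3 can never fire.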